Efficiency and Innovation: The Impact of Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is one of the more significant recent developments in AI and natural language processing. It combines two kinds of models, a retrieval-based model and a generative one, and that combination opens up new possibilities for both creative work and practical applications. It is reshaping the landscape of AI-driven content generation. But what precisely is RAG? How does it influence this terrain? Let’s delve into the concept.

Understanding Retrieval-Augmented Generation

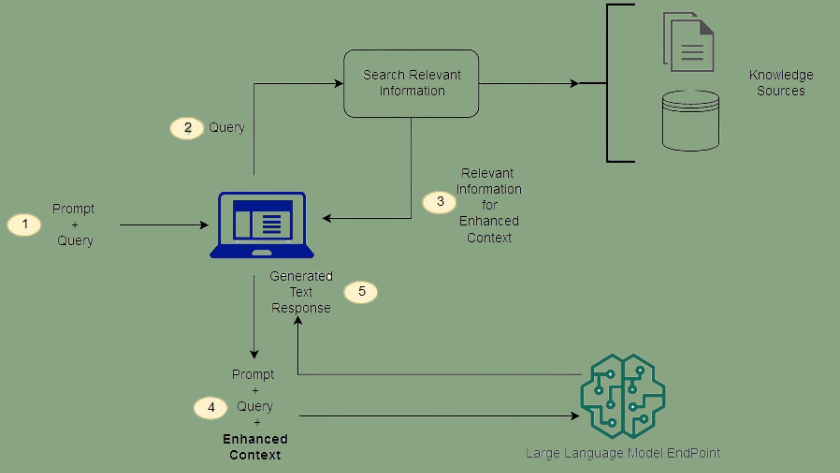

Retrieval-Augmented Generation, at its core, integrates two distinct AI methodologies: retrieval and generation. Retrieval models excel at selecting and extracting relevant passages from large collections of stored documents. Generative models, in contrast, produce new content from the prompts that users provide. Combining the two eases a key limitation of a standalone generative model, which can draw only on what it learned during training, and makes the system a more resourceful option for generating informed, creative content.

The Mechanics Behind RAG

Envision this scenario: you are assigned to write a compelling article about the history of space exploration. Instead of beginning with a blank page, you give the system a focused prompt: “Space Exploration Milestones.” The retrieval model kicks into action, searching large document collections and returning the most relevant content. The generative component then takes the retrieved content and synthesizes it into a cohesive, original narrative. This is where RAG shows its strength: the result is an integrated article grounded in the retrieved information.
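The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the three-document corpus is made up, the retriever is a simple bag-of-words cosine similarity rather than a real search index or embedding model, and `generate` is a hypothetical placeholder for a call to an actual language model.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus standing in for a large document collection.
DOCUMENTS = [
    "Space exploration began a new phase in 1957 when Sputnik 1 reached orbit.",
    "Apollo 11 landed the first humans on the Moon in 1969, a landmark in space exploration.",
    "Sourdough bread needs a starter made of flour and water.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Return up to k documents that share vocabulary with the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the writing task."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nUsing only the context above, write about: {query}"


def generate(prompt):
    """Placeholder (assumption): a real system would send the prompt to an LLM here."""
    return f"[model output for a prompt of {len(prompt)} characters]"


if __name__ == "__main__":
    topic = "Space Exploration Milestones"
    prompt = build_prompt(topic, retrieve(topic, DOCUMENTS))
    print(prompt)
    print(generate(prompt))
```

With this toy corpus, the two space-related documents are retrieved and the unrelated one is left out, so the generator only sees context that matches the topic.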

How Is RAG Different From Fine-Tuning?

Retrieval-Augmented Generation (RAG) and fine-tuning both contribute to the advancement of AI-driven content generation. However, they function on fundamentally distinct principles — each presenting unique benefits and applications.

In machine learning, fine-tuning means continuing to train a pre-trained model on a specific task or dataset. The objective? To adapt its parameters so that it performs better on that task. In natural language processing (NLP), it is standard practice to take a pre-trained language model, for instance one of OpenAI’s GPT models, and fine-tune it on domain-specific datasets. This strengthens its comprehension and generation within that particular domain.

In contrast, RAG takes a hybrid approach: it combines retrieval and generation. While fine-tuning mainly focuses on refining the generative model through task-specific training, RAG adds a retriever component to the process. Instead of depending only on the knowledge the model absorbed from its training data, a RAG system extends its scope by searching external knowledge bases at the time a request is made.
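To make the contrast concrete, here is a small sketch under stated assumptions. `FrozenModel` is a hypothetical stand-in for a pre-trained model whose parameters never change, and `KnowledgeBase` is a hypothetical external store searched by simple keyword overlap. The example policy text is invented for illustration. The point is only that new knowledge reaches the output by updating the store, not by retraining the model.

```python
import re
from dataclasses import dataclass, field
from typing import List


def keywords(text: str) -> set:
    """Lowercased words longer than three letters, a crude stand-in for real indexing."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


@dataclass(frozen=True)
class FrozenModel:
    """Stand-in for a pre-trained LLM; frozen=True mirrors 'no parameter updates'."""
    version: str = "base-v1"

    def answer(self, question: str, context: List[str]) -> str:
        # A real model would generate text; this stub just echoes the first passage.
        if not context:
            return "I don't know."
        return f"According to the retrieved passage: {context[0]}"


@dataclass
class KnowledgeBase:
    """External store that can be updated at any time without touching the model."""
    passages: List[str] = field(default_factory=list)

    def add(self, passage: str) -> None:
        self.passages.append(passage)

    def search(self, question: str) -> List[str]:
        wanted = keywords(question)
        return [p for p in self.passages if wanted & keywords(p)]


if __name__ == "__main__":
    model = FrozenModel()
    kb = KnowledgeBase()
    question = "What is the refund window?"

    print(model.answer(question, kb.search(question)))   # nothing retrieved yet
    kb.add("The refund window is 30 days.")              # made-up example fact
    print(model.answer(question, kb.search(question)))   # same model, new answer
```

Fine-tuning would instead change the model itself, which is why the two approaches are often treated as complementary rather than interchangeable.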

Unleashing Creativity with RAG

Retrieval-Augmented Generation can also augment human creativity by putting the large reservoir of knowledge behind the retrieval model within easy reach. Content creators can explore diverse perspectives, unearth obscure facts, craft compelling narratives, and generate new ideas for their research. For many of them, RAG can become not just a useful tool but an essential one for pushing the boundaries of imagination.

Benefits of Using RAG With LLMs

Pairing Large Language Models (LLMs) with Retrieval-Augmented Generation (RAG) offers benefits that go beyond what either provides alone. It gives content creators, and researchers too, an opportunity to work more creatively and efficiently while deepening their knowledge base.

Enhanced Contextual Understanding:

Large Language Models (LLMs) have revolutionized natural language processing by capturing vast linguistic knowledge and patterns. However, they often operate in isolation, without direct access to external sources of information. Integrating RAG with LLMs overcomes this limitation. By providing access to external information through retrieval mechanisms, RAG enhances the contextual understanding of LLMs. This helps LLMs produce content that is linguistically rich, contextually relevant, and grounded in real-world knowledge.

Streamlined Research and Content Creation:

In fields such as journalism, academia, and marketing, the ability to collate and organize information quickly is important. RAG combined with an LLM streamlines research and content creation by automating the collection of information and structuring it into organized chunks. For content writers, this means a chance to quickly obtain useful information, explore varied perspectives, and produce stories that are engaging and compelling for the audience.
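One common way RAG pipelines structure collected text into chunks is to split it into overlapping windows before indexing, so that each piece is small enough to retrieve on its own. The sketch below assumes word-based windows. The function name `chunk_words` and the default sizes are illustrative choices, not something the approach prescribes.

```python
def chunk_words(text, size=50, overlap=10):
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(str(i) for i in range(10))
    for chunk in chunk_words(sample, size=4, overlap=1):
        print(chunk)
```

The overlap is a design choice: it reduces the chance that a sentence relevant to a query is cut in half at a chunk boundary, at the cost of storing some words twice.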

Tailored Personalization and Adaptability:

In an era dominated by personalized experiences and tailored content, the ability to adapt to individual preferences is invaluable. RAG with LLMs stands out in this respect. When the retrieval step draws on user-specific data and preferences, the LLM can generate content that resonates with individual tastes and interests. This equips organizations to build stronger connections with their audiences through personalized recommendations, targeted marketing campaigns, and adaptive learning experiences.
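As a rough illustration of retrieval that draws on user preferences, the sketch below ranks catalog items by how many tags they share with a user's stated interests and then builds a prompt from the matches. The user profiles, catalog, and function names are all hypothetical, and a real system would likely use richer signals than tag overlap.

```python
# Hypothetical user profiles and content catalog, invented for this example.
USER_INTERESTS = {
    "ana": {"astronomy", "python"},
    "ben": {"cooking"},
}

CATALOG = [
    {"title": "Observing Jupiter's moons", "tags": {"astronomy"}},
    {"title": "Weeknight pasta basics", "tags": {"cooking"}},
    {"title": "Plotting star charts in Python", "tags": {"astronomy", "python"}},
]


def retrieve_for_user(user_id, catalog, interests, k=2):
    """Return up to k titles whose tags overlap the user's interests, best match first."""
    wanted = interests.get(user_id, set())
    scored = [(len(item["tags"] & wanted), item) for item in catalog]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps catalog order on ties
    return [item["title"] for _, item in matches[:k]]


def personalized_prompt(user_id, request, titles):
    """Combine the request with the user-specific retrieval results."""
    listing = "\n".join(f"- {t}" for t in titles) or "- (no matching items)"
    return f"Items relevant to user {user_id}:\n{listing}\n\nTask: {request}"


if __name__ == "__main__":
    titles = retrieve_for_user("ana", CATALOG, USER_INTERESTS)
    print(personalized_prompt("ana", "Recommend something to read this weekend.", titles))
```

The same request produces a different prompt for each user, which is what lets a single generative model give tailored output.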

Retrieval-Augmented Generation stands out as a source of creativity and ingenuity in our innovation-driven world. By melding retrieval and generation techniques, RAG opens the door to a wide range of new possibilities. As we set out on this transformative path, let us not only celebrate its potential but also stay vigilant in ensuring that AI is used ethically.